Discovering Apache Kafka: A Simple Introduction

Apache Kafka is a distributed event streaming platform. It is used for high-performance data pipelines, streaming analytics, data integration, and mission-critical applications.

What is a streaming platform?

- Creating real-time data streams

- Processing real-time data streams

Kafka acts as a distributed streaming platform, allowing you to publish, subscribe to, store, and process streams of records in real time.

How does it work?

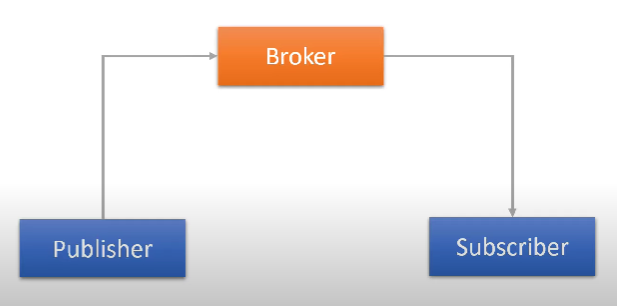

Kafka follows the publish/subscribe (pub/sub) messaging model and works as an enterprise-level messaging system. A typical messaging system has at least three components:

- Publisher: sends data records called messages.

- Message broker: receives data from the publisher and stores it.

- Subscriber or consumer: reads data messages from the broker and processes them.
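
The three roles above can be sketched in a few lines of plain Python. This is only a toy, in-memory model to show who does what; `ToyBroker`, `publish`, and `read` are made-up names for illustration and are not part of any Kafka client library.

```python
from collections import defaultdict


class ToyBroker:
    """In-memory stand-in for a message broker (not the Kafka API)."""

    def __init__(self):
        # topic name -> list of stored messages, in arrival order
        self._topics = defaultdict(list)

    def publish(self, topic, message):
        """Called by a publisher: store the message under its topic."""
        self._topics[topic].append(message)

    def read(self, topic, start=0):
        """Called by a subscriber: return messages from position `start` on."""
        return list(self._topics[topic][start:])


broker = ToyBroker()

# Publisher side: send two records.
broker.publish("orders", "order-1 created")
broker.publish("orders", "order-2 created")

# Subscriber side: read everything, then read only what is new.
first_batch = broker.read("orders")
broker.publish("orders", "order-3 created")
second_batch = broker.read("orders", start=len(first_batch))

print(first_batch)   # ['order-1 created', 'order-2 created']
print(second_batch)  # ['order-3 created']
```

Note that the broker keeps the messages after they are read. The subscriber decides where to start reading, which is the idea that offsets build on later.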

Kafka Components

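- Kafka Broker: the central server that receives, stores, and serves messages

- Kafka Client: producer and consumer API libraries

- Kafka Connect: data integration framework for moving data between Kafka and external systems

- Kafka Streams: library for building real-time data processing applications

- KSQL (now ksqlDB): SQL engine for querying and processing Kafka streams in real time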

Let's begin learning Kafka with some key concepts.

Kafka Key Concepts

- Producers: Applications that publish data to Kafka topics are producers. In simple words, a producer is any entity in the Kafka ecosystem that produces data, for example IoT sensors or software applications.

- Consumers: Applications that subscribe to topics, then read and process the data, are known as consumers. In simple words, a consumer is a Kafka entity that reads data from a Kafka broker.

- Brokers: A broker is a Kafka server that sits between producers and consumers. It receives messages from producers, stores them, and serves them to consumers. In the diagram above, Kafka plays the broker role.

- Cluster: A cluster is a group of computers, each running an instance of the Kafka broker.

- Topic: A topic is a named, logical category of data in Kafka. If you have a database background, you can roughly compare a topic with a table in a database.

- Partitions: Divisions of a topic for scalability and parallelism. In simple words, a topic is split into smaller pieces called partitions. Kafka does not decide how many partitions a topic needs. As Kafka architects, we specify the partition count ourselves when creating a topic; otherwise the broker's configured default applies.

- Partition Offset: A sequential identifier assigned to each message within a partition. In simple words, it is the unique sequence ID of a message in its partition. The broker assigns it automatically, and once assigned it never changes. In every partition, offsets start at 0 and increase by one with each new message.

- Consumer Group: A group of consumers formed to share the workload. The consumers in a group jointly consume a topic, and each partition is read by only one consumer in the group at a time.

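- Replication: Data redundancy for fault tolerance. Copies of each partition are kept on multiple brokers so the data survives a broker failure.

- ZooKeeper: Coordinates and manages Kafka brokers. Newer Kafka versions can run without ZooKeeper by using Kafka's built-in KRaft mode instead.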

- Connectors: Used to integrate Kafka with external systems.

- Event Streaming: Event streaming is the digital equivalent of the human body's central nervous system. Technically speaking, event streaming is capturing data in real time from event sources like databases, sensors, mobile devices, cloud services, and software applications in the form of streams of events; storing these event streams durably for later retrieval; manipulating, processing, and reacting to the event streams in real time as well as retrospectively; and routing the event streams to different destination technologies as needed.
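
To make partitions and offsets from the list above more concrete, here is a minimal sketch in plain Python. It models a partition as an append-only list, which is an assumption made for illustration; `ToyPartition` is a hypothetical name, not a Kafka class, and a real broker stores its log on disk.

```python
class ToyPartition:
    """Append-only log; a record's offset is its position in the log."""

    def __init__(self):
        self._records = []

    def append(self, value):
        """Store a record and return the offset assigned to it."""
        offset = len(self._records)  # next offset: 0, 1, 2, ...
        self._records.append(value)
        return offset

    def read_from(self, offset):
        """Return (offset, value) pairs starting at the given offset."""
        return list(enumerate(self._records))[offset:]


# A topic with two partitions; each partition keeps its own offsets.
topic = [ToyPartition(), ToyPartition()]

offsets_p0 = [topic[0].append(v) for v in ("a", "b", "c")]
offsets_p1 = [topic[1].append(v) for v in ("x",)]

print(offsets_p0)             # [0, 1, 2]
print(offsets_p1)             # [0]
print(topic[0].read_from(1))  # [(1, 'b'), (2, 'c')]
```

Both partitions start counting at 0, so an offset only identifies a message together with its partition.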

To tie these concepts together, let's consider a scenario. Imagine you own a library with over 10,000 books. You decide to hire a librarian named Kafka. As soon as Kafka starts, he organizes all your books into different sections, which he calls topics. Each topic can have multiple shelves, known as partitions, to hold more books and allow multiple readers to browse them simultaneously. Authors, or producers, continuously publish new books, and Kafka diligently places them on the shelves based on their genre or type.
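
The librarian's shelving rule can be sketched as a small function that maps a key (here, the genre) to a shelf number. This is a simplified illustration: `choose_partition` and `NUM_PARTITIONS` are hypothetical names, and CRC32 is only a stand-in for a stable hash. Kafka's own client uses a different hash function for keyed records.

```python
import zlib

NUM_PARTITIONS = 3  # hypothetical partition (shelf) count


def choose_partition(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a key to a partition index using a stable hash.

    CRC32 is a stand-in chosen for this sketch, not Kafka's hash.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


# One list of book titles per shelf.
shelves = {p: [] for p in range(NUM_PARTITIONS)}

books = [("fantasy", "Book A"), ("history", "Book B"), ("fantasy", "Book C")]
for genre, title in books:
    shelves[choose_partition(genre)].append(title)

# Books with the same key always end up on the same shelf, in publish order.
fantasy_shelf = shelves[choose_partition("fantasy")]
print(fantasy_shelf)
```

Because the same key always maps to the same partition, all records for one key stay in order relative to each other.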

Now, there are avid readers, or consumers, who have formed book clubs, called consumer groups, based on their preferred reading genres. The members of a book club share the shelves among themselves and jointly read the books on them. Each reader keeps track of the last page they've read, known as an offset, so they can easily pick up where they left off next time.
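
Here is a rough sketch of how a book club could split the shelves and remember its place. The round-robin split and the names `assign_partitions`, `poll`, and `committed` are assumptions made for this example. Real Kafka consumers use configurable assignment strategies and store committed offsets in Kafka itself.

```python
def assign_partitions(partitions, consumers):
    """Spread partitions over consumers round-robin; one owner per partition."""
    assignment = {c: [] for c in consumers}
    for i, p in enumerate(partitions):
        assignment[consumers[i % len(consumers)]].append(p)
    return assignment


# Three partitions of one topic, already holding some records.
partition_logs = {0: ["a0", "a1", "a2"], 1: ["b0", "b1"], 2: ["c0"]}
group = ["reader-1", "reader-2"]

assignment = assign_partitions(sorted(partition_logs), group)
print(assignment)  # {'reader-1': [0, 2], 'reader-2': [1]}

# Next offset to read, tracked per partition for the whole group.
committed = {p: 0 for p in partition_logs}


def poll(consumer, max_records=2):
    """Read up to max_records from each partition the consumer owns."""
    records = []
    for p in assignment[consumer]:
        start = committed[p]
        batch = partition_logs[p][start:start + max_records]
        records.extend(batch)
        committed[p] = start + len(batch)  # remember where we stopped
    return records


first = poll("reader-1")
second = poll("reader-1")
print(first)   # ['a0', 'a1', 'c0']
print(second)  # ['a2']
```

The second call picks up exactly where the first one stopped, which is what the "last page read" in the analogy stands for.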

To ensure that every book is always available, the library maintains copies, or replicas, in different rooms called brokers. Kafka, the librarian, hires a manager, or ZooKeeper, to oversee these brokers and ensure the smooth operation of the library.
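
The idea of keeping copies in several rooms can be sketched as follows. This is a deliberately simplified model: every write is copied to all replicas immediately, and when the leading broker fails, the next surviving one takes over. `ToyReplicatedPartition` is a hypothetical name, and real Kafka replication and leader election are considerably more involved.

```python
class ToyReplicatedPartition:
    """One partition whose log is copied to several brokers."""

    def __init__(self, broker_ids):
        # One copy of the log per broker; the first broker starts as leader.
        self.replicas = {b: [] for b in broker_ids}
        self.leader = broker_ids[0]

    def append(self, value):
        # Simplification: copy the record to every live replica right away.
        for log in self.replicas.values():
            log.append(value)

    def fail_broker(self, broker_id):
        """Drop a broker; promote a surviving replica if it was the leader."""
        del self.replicas[broker_id]
        if broker_id == self.leader:
            self.leader = next(iter(self.replicas))

    def read_all(self):
        """Reads are served from the current leader's copy."""
        return list(self.replicas[self.leader])


part = ToyReplicatedPartition(["broker-1", "broker-2", "broker-3"])
part.append("book-1")
part.append("book-2")

part.fail_broker("broker-1")  # the room holding the leader copy is lost
survivors = part.read_all()

print(part.leader)  # broker-2
print(survivors)    # ['book-1', 'book-2']
```

Even though the leader's room is gone, no book is lost, because another room already holds a full copy.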

Special tunnels, or connectors, connect this library to other libraries, enabling book exchanges. Similarly, Kafka connectors integrate Kafka with external systems, facilitating seamless data exchange.

Let's look at two use cases that show Kafka's versatility:

1. Real-time Payment Processing

In finance, every moment counts. Payment gateways, banks, and financial institutions use Apache Kafka to process payment transactions in real time. Whether it's authorizing credit card payments or transferring funds between accounts, each transaction can flow through Kafka as an event, which lets these organizations handle high volumes of transactions quickly and reliably.

2. IoT Data Management

The Internet of Things (IoT) ecosystem is a vast network of interconnected devices generating massive volumes of data. In settings such as automated farms and warehouses, Apache Kafka can capture, process, and analyze sensor data in real time. Whether it's monitoring soil moisture levels in agriculture or managing inventory in logistics, organizations can act on sensor readings as they arrive to optimize operations and improve decision-making.